Visual realism:

Exploring effects on memory, language production, comprehension, and preference

Proefschrift ter verkrijging van de graad van doctor

aan Tilburg University

op gezag van de rector magnificus,

prof. dr. E. H. L. Aarts,

in het openbaar te verdedigen ten overstaan van een door het college voor promoties aangewezen commissie

in de aula van de Universiteit

op woensdag 10 februari 2016 om 14:15 uur

door Hans Gerard Wilhelmus Westerbeek,

geboren op 8 april 1985 te Breda.

Promotores

Prof. Dr. A. A. Maes

Prof. Dr. M. G. J. Swerts

Copromotor

Dr. M. A. A. van Amelsvoort

Overige leden van de promotiecommissie

Prof. Dr. E. J. Krahmer

Prof. Dr. E. de Vries

Prof. Dr. B. Indurkhya

Dr. C. M. J. van Hooijdonk

Dr. R. Cozijn

On the cover

The cover photo shows the work LUCHTMATERIALISATIE (SKYMATERIALIZATION) by Liza Voetman. SKYMATERIALIZATION is an attempt to objectify observation. For this materialization, Voetman joined forces with two paint suppliers, aiming to objectively capture the sky’s color as seen in southern direction from N51° 34.402’ E 005° 05.363’, at precisely 18:50, on 31 consecutive days in March 2015. Using different techniques, the paint suppliers measured and blended the sky’s colors to allow Voetman to depict the color on wooden panels. SKYMATERIALIZATION demonstrates the full scope of this research.

(Photo: Hans Westerbeek)

Table of contents

Summary

A ubiquitous part of everyday communication takes place via pictures. For example, people use pictures to show what things look like, how things work, or what can be dangerous. Some of these pictures are less realistic than others: They distort reality by for instance violating what the represented reality looks like, or they present a simplified, schematized version of reality. This dissertation aims to explore whether such distortions of reality in representational pictures influence the way people describe, remember, understand, and learn from these pictures.

This dissertation explores the influence of visual realism from various fields of research, including memory, language production, route descriptions, educational psychology, and information design. In a series of experimental studies, in which participants perceive and cognitively process pictures, effects of visual realism on different kinds of processing are explored and described.

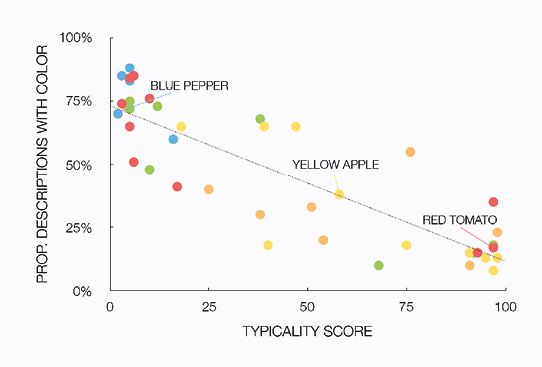

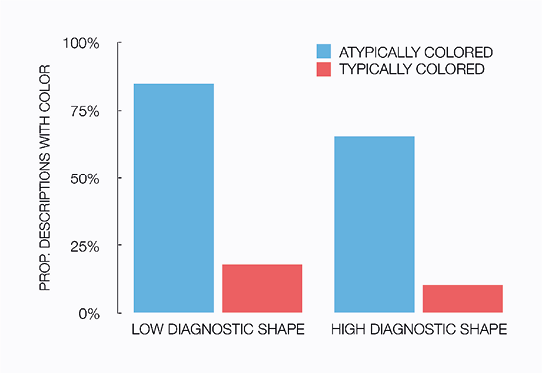

Chapter 1 introduces visual realism in pictures, and hypothesizes how deviations from visual realism may influence cognitive processing. Chapter 2 studies effects of visual realism on object recognition and memory, and the focus is on atypically colored pictures of objects. It is found that color atypicality affects object recognition, and consecutively atypically colored objects are remembered better than typically colored ones under certain circumstances. Chapter 3 introduces similar atypically colored objects in a language production task. Color atypicality is found to have large effects on the production of referring expressions: People mention atypical colors more frequently than typical colors when describing objects in visual context. Chapter 4 also concerns language production, but now in the context of producing route descriptions from maps. Maps are often either visually detailed (i.e., aerial photographs) or consist of schematic graphics, and this difference in visual realism is found to affect the both form and content of route descriptions that people produce. Chapter 5 further explores the differences between photographs and schematic graphics, but now in the context of educational design. Secondary school students are found to benefit from schematization, but experimental results suggest that this benefit is related to schematic pictures employing visual emphasis in pictures, rather than the leaving out of irrelevant visual detail. Finally, Chapter 6 presents a case study on visualizing football statistics, and it explores effects of visually realistic elements and natural metaphors on how people use (and prefer to use) an information display. The final chapter, Chapter 7, presents the conclusions drawn in this dissertation, identifies connections between the experiments in Chapters 2 through 6, discusses limitations of this dissertation and suggestions for future work, and it summarizes methodological and practical implications.

Taken together, the experiments reported in this dissertation present effects of different deviations from visual realism, using stimuli diverging in complexity and usage context, and situated in multiple fields of study. Visual realism is found to have an effect in studies in recognition, memory, language production, learning and comprehension, and information design. All studies in this dissertation, reported in Chapters 2 to 6, offer support for the hypothesis that deviations from visual realism in representational pictures influence how people process these pictures. Deviations of visual realism in pictures, either in terms of color atypicality or as schematization, are found to affect a number of human reactions towards these pictures, and the experiments reported in this dissertation suggest that pictures that deviate from visual realism are processed differently than realistic counterparts.

Table of contents

Chapter 1

General introduction

Human interaction is an intricate phenomenon that takes place not only via words, sounds, prosody, and facial expressions, but also via pictures (e.g., Tversky, 2000, 2011). In fact, using pictures to convey messages is ubiquitous in everyday communication. For example, manuals present pictures to show how things work or how they should be constructed (e.g., “insert plug A into connector B”), traffic signs and warnings can communicate where dangers are (“take care not to trip when boarding the train”), advertising often revolves around pictures (“see how sleek and elegant this new smartphone is”), and the news visualizes events by for example plotting statistics in graphs (“the national team scored five goals in yesterday’s game, and the opposition scored once”).

Pictures serve many functions (e.g., Carney & Levin, 2002; Pettersson, 1998, 2013; Rieber, 2000; Tversky, 2011). Pictures may have an aesthetic role, intended to elicit emotions in the people perceiving them, such as to like a new smartphone. Pictures can also be meant to affect behavior, by for example showing people where to insert a plug, what smartphone to buy, or where they might trip or fall if they do not pay attention. Some pictures have a primary function to visualize informational content, for example showing how a sports match played out. The focus in this dissertation is on the representational function of pictures. Representational pictures are pictures that represent things in the real world. In other words, they depict, as if they are a pictorial variant of descriptions (Carney & Levin, 2002; Pettersson, 2013; Tversky, 2001; 2011).

In depicting things and their features, representational pictures in visual communication often distort the reality they represent (Tversky, 2011): Some pictures are less realistic than others. The sign warning us not to trip and fall does not show the exact same train that we are exiting, for example. In the literature, visual realism is defined in terms of likeness: The less realistic a picture is, the fewer features of the represented reality are truthfully encompassed by the picture (e.g., Dwyer, 1976; Pierroutsakos & DeLoache, 2003; Rieber, 2000). In this dissertation, visual realism is defined as the degree to which a picture is visually similar to the reality it represents. Thus, according to this definition, color photographs are in principle more visually realistic than black and white pictures, which are in turn more realistic than schematic line drawings, for example. Also, pictures that show objects in strange or unlikely colors are less realistic than true-color counterparts.

The individual studies in this dissertation deal with different visual characteristics of pictures that affect the degree to which pictures are visually realistic. One concerns characteristics of pictures that are incongruent with the depicted content in reality. A case in point is the use of atypical colors. Another way in which pictures can be less visually realistic concerns schematization, where certain characteristics of reality are left out of the picture, and others are highlighted.

These two ways in which pictures can violate visual realism are illustrated in Figure 1.1. Figure 1.1a shows a case where the depiction of an apple deviates from how apples usually appear in reality (assuming that blue apples do not exist). In other words, it is incongruent with the reality it represents, as it violates one of the features of what a typical apple looks like, namely its color. Color typicality is discussed more in depth in Chapters 2 and 3, and for example in Naor-Raz, Tarr, & Kersten, 2003; Ostergaard and Davidoff, 1985; Price and Humphreys, 1989; Tanaka and Presnell, 1999; Tanaka, Weiskopf, and Williams, 2001; Therriault, Yaxley, and Zwaan, 2009; and Vernon and Lloyd-Jones, 2003.

Figure 1.1 Two apples.

Notes (A) A photographic picture of a blue apple, (B) A schematic drawing of an apple.

The drawing of an apple in Figure 1.1b also deviates from how apples usually appear in reality, but in a different way than Figure 1.1a. The schematic picture in Figure 1.1b leaves out some details of what an apple looks like (such as color and texture), as it is a schematic picture of an apple. In addition, clear lines and contrasts are used to highlight certain characteristics, such as the apple’s outline and stem. Schematization of pictures is further discussed in Chapters 4 and 5, and for example in Butcher, 2006; Dwyer, 1976; Goldstone and Son, 2005; Scheiter, Gerjets, Huk, Imhof, & Kammerer, 2009; Schwartz, 1995; and Tatler and Melcher, 2007.

Many of the pictures that we come across in everyday (visual) communication are incongruent with reality, or present a schematic form of what they represent. The pictures in Figure 1.2 show some (familiar) examples. From left to right, it shows a still from the “pink elephants on parade” scene in Disney’s Dumbo movie, screen shots from mapping software on a mobile device, an expository picture of the anatomy of the human eye, and an overview of one of the stages in a cycling race. Note how all these pictures deviate from reality: Elephants are not pink but grey, the map shows an abstract version of the vicinity of Tilburg University, the eye anatomy picture does not resemble what an actual eye looks like, and cyclists get a different view of the climbs and descents than what the schematic overview of the stage looks like.

Figure 1.2 Figure 1.2 Some examples of incongruent and schematic pictures in practice.

Notes (A) Pink elephants on parade, (B) Map variations on a mobile device, (C) Anatomy of the eye, (D) Pro le of a stage in a cycling race.

This dissertation asks questions about how such deviating pictures function, and how they are processed by the people perceiving them. Are they processed differently than more congruent or realistic pictures? Are deviating pictures in any way beneficial? Would people remember such pictures better? Would it affect how people describe them? Would it help them to understand a phenomenon better if a picture is not realistic? Gaining more insight into potential influences of visual realism on processing and understanding may be relevant for a variety of scientific disciplines and methodologies, and could be interesting for a range of practical applications, such as advertising, navigation, educational technology, and information design.

Processing and understanding pictures is essential in visual communication: For such communication to be effective, a visually conveyed message needs to be understood by its receiver, which is a matter of cognitive processing in the receiver’s mind (e.g., Hegarty, 2011; Tversky, 2011). The focus in this dissertation is thus on the cognitive processing and understanding of pictures (rather than on their production). Considering common theories on the processing of pictures, for example in the areas of visual cognition (e.g., Pinker, 1984), object recognition (e.g., Biederman, 1987; Tanaka et al., 2001) and naming (Humphreys, Riddoch, & Quinlan, 1988), the understanding of pictures (e.g., Tversky, 2011), and of pictures in combination with expository text (e.g., Ainsworth, 2006; Mayer, 2005), an essential aspect of understanding a picture is the consultation or assessment of some sort of mental representation of what is depicted by that picture (i.e., prior knowledge). Assuming that such mental representations are based on prior experiences, viewing and processing deviating pictures should yield a certain confrontation or conflict between picture and prior knowledge.

Such differential processing may lead to several effects. In the literature, deviating pictures are for example found to be distinctive in memory (e.g., Gounden & Nicolas, 2012; McDaniel & Einstein, 1986), as pictures that deviate from reality are remembered better than more realistic pictures. In addition, deviating pictures of objects are described as atypical, since they are not alike the common or typical appearance of the object in reality. Atypical colors are for example found to slow down recognition of objects because color is an intrinsic property of some objects, and is used to identify these objects (e.g., Bramão, Reis, Petersson, & Faísca, 2011; Naor-Raz et al., 2003; Tanaka et al., 2001). Atypical colors also attract visual attention (Becker, Pashler, & Lubin, 2007), leading to effects of visual salience on for example language production (Mitchell, 2013). Concerning schematic pictures, schematized visualizations are sometimes found to improve learning and comprehension, which is explained in terms of schematic pictures not presenting learners with irrelevant visual information (e.g., Dwyer, 1976; Scheiter et al., 2009; but also see Imhof, Scheiter, & Gerjets, 2011; Joseph & Dwyer, 1984). Pictures that deviate from reality can be found to be not cognitively ‘natural’ (e.g., Hegarty, 2011), or otherwise less alike the assumed ‘cognitive template’ of reality.

Taken together, this leads to the hypothesis that deviations from visual realism influence cognitive processing. Recognizing, remembering, describing, and understanding deviating pictures may evoke different cognitive processes than high-fidelity realistic counterparts would.

In this dissertation, the influence of deviations from visual realism, in the form of color atypicality and schematization is explored, adopting a multidisciplinary approach. In a series of experimental studies, in which participants perceive and cognitively process pictures, effects of visual realism on different kinds of processing are explored and described. Chapter 2 covers effects of visual realism on how pictures of things are remembered. How such pictures are verbally referred to in definite descriptions is investigated in Chapter 3. Chapter 4 looks into a specific context in which people verbally describe visualizations: producing route descriptions from maps. Chapter 5 concerns pictures in an educational context: Textbooks and educational applications often use pictures to explain certain concepts and processes, and this chapter considers effects of realism in these pictures on learning. Chapter 6 commences from a more applied perspective, and explores how using visually realistic elements in an information display that depicts statistics of soccer games affects understanding of and appreciation for the display.

Visual realism is thus studied from various angles. The experimental studies in this dissertation are rooted in research into memory, language production, route descriptions, educational psychology, and information design. Each field encompasses experimental research in which people look at and process visual information, and effects of manipulations in this information are expected to be observed in the resulting behavior (e.g., Abu-Obeid, 1998; Clarke, Elsner, & Rohde, 2013; Dwyer, 1976; Hegarty, 2011; Hunt & Worthen, 2006). In other words, the experimental stimulus input consists of pictures, and the output of interest lies in several behavioral measures.

As this dissertation aspires to explore visual realism by studying it’s influence in different fields of study, each field is introduced in the respective chapters of this dissertation. Each chapter presents a theoretical framing that introduces visual realism in a particular field. How realism plays a role in these chapters will be further explained in the Overview below.

Table of contents

Overview and research questions

Before each Chapter is introduced in this overview, it should be noted that all studies in this dissertation (i.e., Chapters 2 to 6) are self-contained, in the sense that they have either been published in a peer-reviewed journal, have been submitted for publication, or are currently under review for such a journal. These individual studies (or parts thereof) have also been presented at one or more international conferences or workshops. Therefore, the chapters themselves provide more in-depth theoretical backgrounds and discussions for the investigated issues. The overview below is merely intended as a brief introduction into the research questions and main findings of each of the chapters. In each chapter, it is indicated on which conference paper(s) or journal paper the chapter is based.

This dissertation is structured on the basis of the two types of deviations from visual realism described above. Chapters 2 and 3 investigate effects of color atypicality, from two different perspectives. Chapters 4 and 5 focus on schematization, again from different perspectives. Finally, Chapter 6 takes a more applied perspective, and focuses on using realistic elements in information displays. Both the stimuli, and the human reactions towards visual realism, get increasingly complex throughout this dissertation, enabling the current work to cover a rich array of human reactions towards different aspects of visual realism in representational pictures.

Chapter 2 studies effects of visual realism on memory. The focus is on atypically colored pictures of objects (as in Figure 1.1a). In memory research, one research question concerns why people generally remember ‘strange’ or ‘different’ things better than common things (Hunt & Worthen, 2006). This effect has been found for words and sentences, as well as for representational pictures. These pictures vary in terms of congruity: Strange things are incongruent or atypical, as they deviate from reality. However, why people remember such stimuli better is an area of current investigation. The research question that is addressed is:

Why are incongruent pictures (atypically colored objects) remembered better than congruent pictures (typically colored objects)?

It has been proposed that one important factor in explaining the effect of atypicality on memory is processing time, but so far research into this explanation is inconclusive (e.g., Gounden & Nicolas, 2012). The findings reported in Chapter 2 support the processing time account, by showing that atypically colored pictures are processed longer than typical ones, and that this is associated with better memory for these pictures. These pictures are based on stimuli used in object recognition studies (e.g., Naor-Raz et al., 2003), depicting everyday objects in atypical colors, such as red bananas and yellow lobsters.

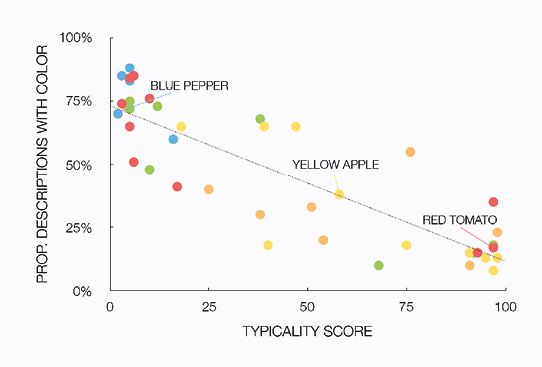

Chapter 3 studies verbal descriptions of atypically colored pictures. In research on language production, particularly on the production of referring expressions, the general focus is on how visual properties of objects and their environment affect the way people uniquely refer to these objects in definite descriptions such as “the blue apple” (e.g., Clarke et al., 2013; Coco & Keller, 2012; Dale & Reiter, 1995; Krahmer & Van Deemter, 2012). In Chapter 3, color atypicality is introduced as a factor in research on referring expressions, addressing the following research question:

Are incongruent pictures (atypically colored objects) described differently than congruent pictures (typically colored objects)?

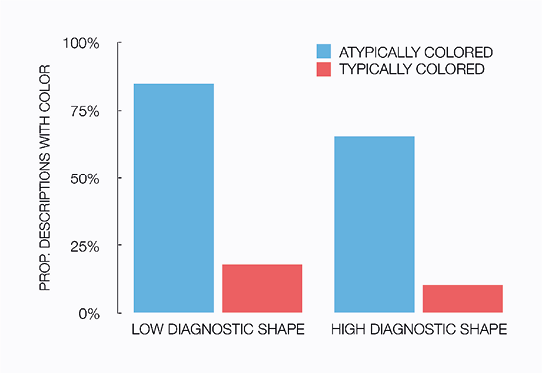

The results of the two language production experiments in this chapter show large effects of color typicality on referring expressions, as atypical colors lead people to mention these colors in their descriptions. This is attributed to cognitive salience: Atypical colors attract attention because they contrast with stored knowledge, and speakers are inclined to mention what is salient.

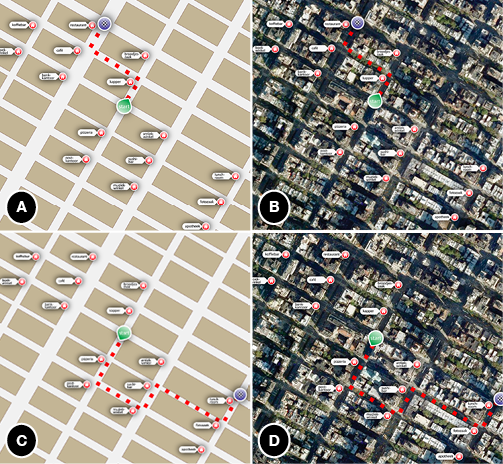

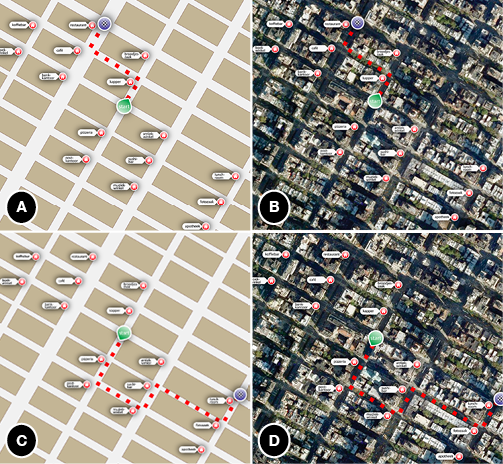

In Chapter 4, the focus is on how visual realism (here operationalized as visual detail, as in Figure 1.1b) in route maps may affect route descriptions. When people look at a map and describe how to go from one point to another (e.g., “go left at the shop and then take the first street on the right”), they produce verbal descriptions of visual information (e.g., Taylor & Tversky, 1992). Route maps can contain different degrees of visual detail (e.g., MacEachren, 2004; Timpf, 1999; and see Figure 1.2b), which is illustrated by mapping software available from for example Google, Apple, and Microsoft, which enable users to deliberately switch between detailed aerial photographs and simplified schematic maps. To investigate how visual detail in maps affects route descriptions, the research question addressed in this chapter is:

Are route descriptions that are based on realistic maps (aerial photographs) different from those based on schematic maps?

It is found that route descriptions are indeed different when people base them on schematic maps, compared to when they describe routes from detailed ones. These differences are related to both the form and the content of route descriptions: Descriptions of photographic maps are longer than descriptions of schematic maps, and the type of landmarks that are used to indicate where to change direction are different, depending on map type.

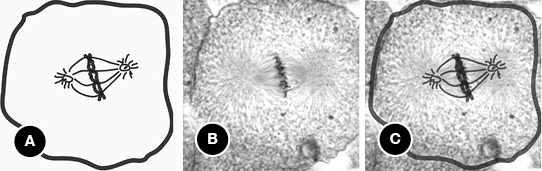

Chapter 5 investigates effects of visual detail on learning and comprehension. For several decades, educational psychologists have expressed an interest in the effects of visual detail in pictures that accompany written or spoken explanations (e.g., Butcher, 2006; Dwyer, 1968; Joseph & Dwyer, 1984; Mason, Pluchino, Tonatora, & Araisi, 2013; Scheiter et al., 2009). In textbooks and other educational materials, representational pictures are often used in combination with text to explain certain concepts, facts, and processes to students. Research in educational psychology has suggested that schematic line drawings support comprehension more effectively than detailed photographs do (e.g., Dwyer, 1968; Scheiter et al., 2009). However, it is unclear what explains this potential advantage of schematic drawings. Hence, the research question addressed in Chapter 5 is:

Why do students learn better from schematic pictures (line drawings) than from detailed pictures (microscopic photographs)?

Chapter 5 focuses on underlying processes or principles to which the potential advantage of schematic line drawings in educational materials can be attributed. It is found that the relative effectiveness of schematic pictures is not due to reduced visual detail compared to photographs, but due to the benefit of added visual emphasis. The findings in Chapter 5 support the idea that this visual emphasis helps students to identify key parts of the pictures, and make meaningful connections between text and pictures.

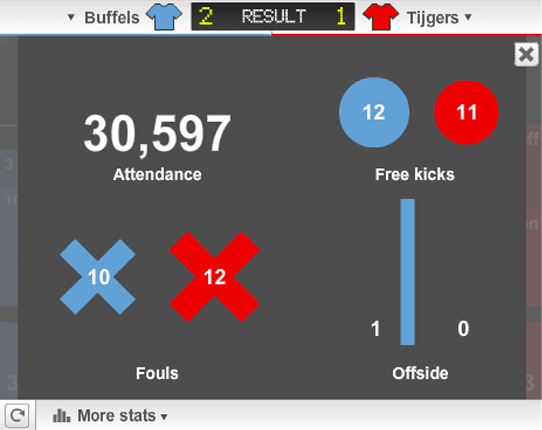

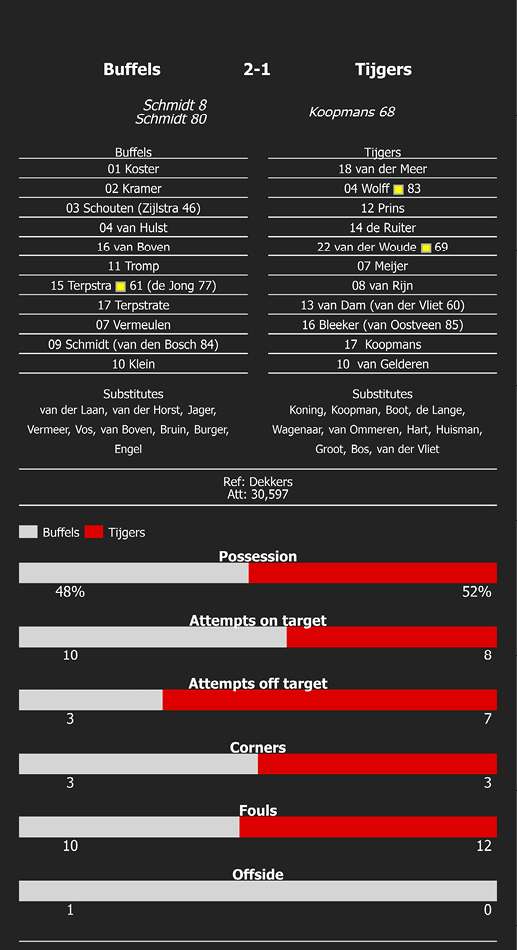

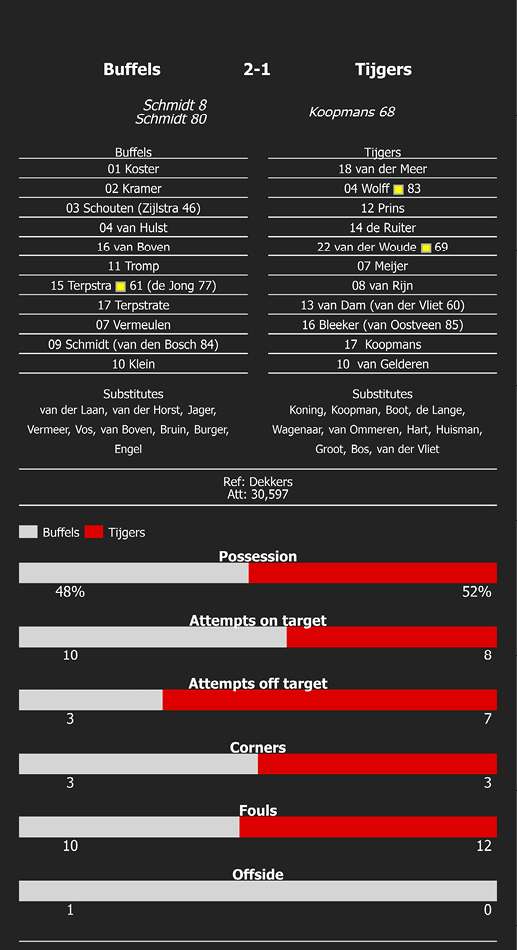

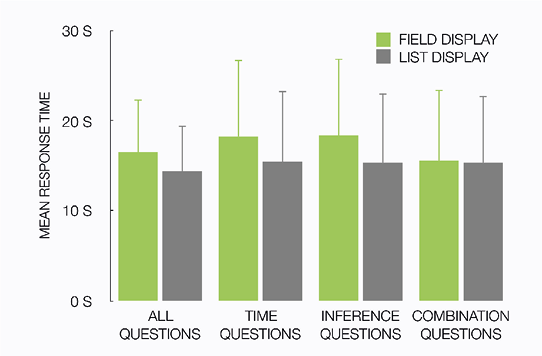

Chapter 6 presents a more practically applied example of visual realism, namely concerning information design. In the design of information displays, insights from perception and cognitive processing research lead to expectations about how they are best designed to facilitate optimal information extraction (e.g., Kessel & Tversky, 2011; Hegarty, 2011). One way to design such displays is to use realistic elements, for example by displaying soccer statistics on relevant parts of a soccer field (e.g., number of corners in the corners, number of goals in the goals). Although using realistic elements in information displays has been investigated by information designers for several decades (e.g., Bateman et al., 2010; Jansen, 2009; Neurath, 1974; Smallman & Cook, 2011), considering how visual realism in real-world information designs affects finding information yields new research questions (Hegarty, 2011). The question that is addressed in Chapter 6 is:

Does the use of visually realistic elements affect how people interpret and use an information display?

Chapter 6 aims to gain insight into effects of visually realistic elements in information displays by investigating whether theoretical design principles scale up to complex real-world information designs. Two real-world designs for summarizing soccer games are compared. Both designs were used by the BBC during the 2010 FIFA World Cup, and form an interesting case for studying the effectiveness of visually realistic elements in information design. While the realistic elements in one of the displays are theoretically beneficial for finding and understanding information, a large scale evaluation among more than five hundred participants shows that this display is actually less effective in several respects, such as finding data and drawing inferences. Also, the display that does not contain such realistic elements, is in several ways preferred by its users.

Chapter 7 summarizes the most important findings and conclusions of the foregoing chapters, reflects on overarching findings and themes, and discusses methodological implications of the current work. It also presents some considerations for practical applications.

In summary, the studies in this dissertation investigate various influences of visual realism on various cognitive processes. These chapters do so in a tradition and with dependent measures appropriate for the scientific field the chapter is situated in. Table 1.1 outlines the fields of research, experimental conditions, and dependent measures in each chapter.

Table 1.1 Overview of studies, conditions, and variables in this dissertation.

|

|

Field of research |

Experimental conditions |

Measure(s) |

| Chapter 2

Naming and remembering typically and atypically colored objects |

|

Memory |

Typically colored objects

Atypically colored objects |

Naming latency

Recognition, free recall |

| Chapter 3

Describing typically and atypically colored objects |

|

Language production:

Referring expressions |

Typically colored objects

Atypically colored objects |

Use of color adjectives |

| Chapter 4

Describing routes from schematic and realistic maps |

|

Language production:

Route desciptions |

Aerial photographs

Schematic maps |

Type of landmarks used

Descriptive accuracy

Descriptive efficiency |

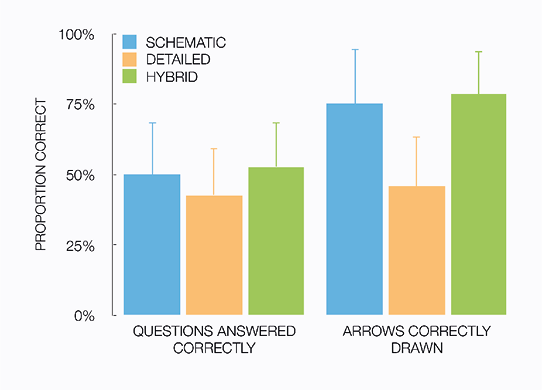

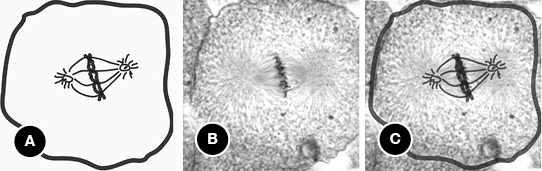

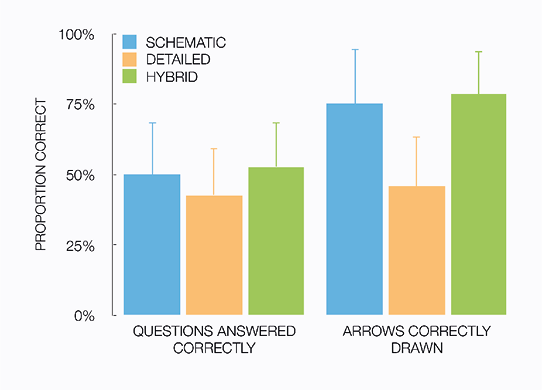

| Chapter 5

Learning with schematic, realistic, and hybrid pictures |

|

Learning and comprehension:

Instructional design |

Microscopic photographs

Schematic pictures

Hybrid pictures |

Subjective evaluation

Comprehension

Accuracy of text-picture connections |

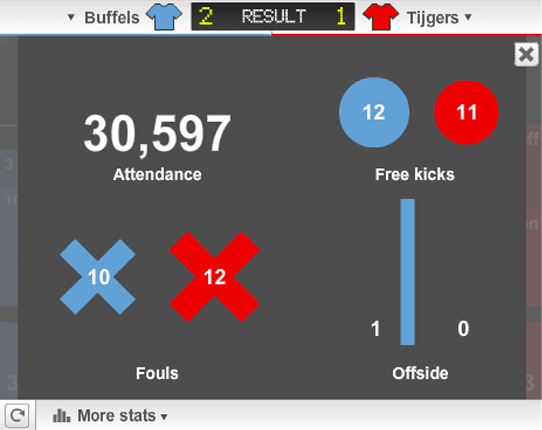

| Chapter 6

Understanding a visually rich information display |

|

Information design |

Visually rich display

Visually simple display |

Search time

Subjective evaluation |

Table of contents

Some remarks on differences between studies

Each study in this dissertation is situated in a different field of psychology and/or communication sciences. This means that the scientific literature is to a large degree unique for each individual chapter, and that there may be some differences in terminology. Most notably, the term realism is rarely used in Chapters 2 through 6, because each field of research has its own terminology to refer to differences in realism. In the Overview above, the terms color atypicality and schematization are used to refer to deviations from visual realism, which reflects the terminology in most chapters.

Additionally, each field of study involves its own traditions in methodology and statistical tests for experimental research. In the chapters that comprise this dissertation, it is intended to follow these traditions, conventions, and best practices closely. Therefore, each study makes use of the techniques that are adequate in each respective field of study, for the type of data that each experimental design yields. This dissertation contains quantitative experimental research in the lab, in classroom settings, and online, and it includes response time analyses, accuracy scores, verbal protocol analyses, quantified subjective evaluations, and basic eye tracking techniques. The statistical analyses deployed range from analysis of variance in between, within, and mixed designs, F1 and F2 analyses, correlation, linear regression, to (logit) mixed modeling (e.g., Barr, Levy, Scheepers, & Tily, 2013; Jaeger, 2008).

This dissertation comprises an extensive and omnifarious overview of influences of visual realism on cognitive processing and human communication, and thus takes up a multidisciplinary approach. The theoretical, terminological, and methodological differences between the studies in this dissertation reflect this multidisciplinary approach.

Table of contents

References

Abu-Obeid, N. (1998). Abstract and scenographic memory: The effect of environmental form on wayfinding. Journal of Environmental Psychology, 18(2), 159—173.

Ainsworth, S. (2006). DeFT: A conceptual framework for considering learning with multiple representations. Learning and Instruction, 16(3), 183—198.

Barr, D. J., Levy, R., Scheepers, C., & Tily, H. J. (2013). Random effects structure for confirmatory hypothesis testing: Keep it maximal. Journal of Memory and Language, 68(3), 255—278.

Bateman, S., Mandryk, R. L., Gutwin, C., Genest, A., McDine, D., & Brooks, C. (2010). Useful junk? The effects of visual embellishment on comprehension and memorability of charts. In Proceedings of the 28th international conference on Human factors in Computing Systems, Atlanta, GA.

Becker, M. W., Pashler, H., & Lubin, J. (2007). Object-intrinsic oddities draw early saccades. Journal of Experimental Psychology: Human Perception and Performance, 33(1), 20—30.

Biederman, I. (1987). Recognition-by-components: A theory of human image understanding. Psychological Review, 94(2), 115—147.

Bramão, I., Reis, A., Petersson, K. M., & Faísca, L. (2011). The role of color information on object recognition: A review and meta-analysis. Acta Psychologica, 138(1), 244—253.

Butcher, K. R. (2006). Learning from text with diagrams: Promoting mental model development and inference generation. Journal of Educational Psychology, 98(1), 182—197.

Carney, R. N. & Levin, J. R. (2002). Pictorial illustrations still improve students’ learning from text. Educational Psychology Review, 14(1), 5—26.

Clarke, A. D., Elsner, M., & Rohde, H. (2013). Where’s Wally: The influence of visual salience on referring expression generation. Frontiers in Psychology, 4: 329.

Coco, M. I. & Keller, F. (2012). Scan patterns predict sentence production in the cross-modal processing of visual scenes. Cognitive Science, 36(7), 1204—1223.

Dale, R. & Reiter, E. (1995). Computational interpretations of the Gricean maxims in the generation of referring expressions. Cognitive Science, 19(2), 233—263.

Dwyer, F. M. (1976). Adapting media attributes for effective learning. Educational Technology, 16(8), 7—13.

Dwyer, F. M. (1968). Effect of varying amount of realistic detail in visual illustrations designed to complement programmed instruction. Perceptual and Motor Skills, 27(2), 351—354.

Goldstone, R. L. & Son, J. Y. (2005). The transfer of scientific principles using concrete and idealized simulations. The Journal of the Learning Sciences, 14(1), 69—110.

Gounden, Y. & Nicolas, S. (2012). The impact of processing time on the bizarreness and orthographic distinctiveness effects. Scandinavian Journal of Psychology, 53(4), 287—294.

Hegarty, M. (2011). The cognitive science of visual-spatial displays: Implications for design. Topics in Cognitive Science, 3(3), 446—474.

Humphreys, G. W., Riddoch, M. J., & Quinlan, P. T. (1988). Cascade processes in picture identification. Cognitive Neuropsychology, 5(1), 67—104.

Hunt, R. R. & Worthen, J. B. (2006). Distinctiveness and memory. Oxford: Oxford University Press.

Imhof, B., Scheiter, K., & Gerjets, P. (2011). Learning about locomotion patterns from visualizations: Effects of presentation format and realism. Computers & Education, 57(3), 1961—1970.

Jaeger, T. F. (2008). Categorical data analysis: Away from ANOVAs (transformation or not) and towards logit mixed models. Journal of Memory and Language, 59(4), 434—446.

Jansen, W. (2009). Neurath, Arntz and ISOTYPE: The legacy in art, design and statistics. Journal of Design History, 22(3), 227—242.

Joseph, J. H. & Dwyer, F. M. (1984). The effects of prior knowledge, presentation mode, and visual realism on student achievement. The Journal of Experimental Education, 52(2), 110—121.

Kessell, A. & Tversky, B. (2011). Visualizing Space, Time, And Agents: Production, Performance, And Preference. Cognitive Processing, 12(1), 43—52.

Krahmer, E. & Van Deemter, K. (2012). Computational generation of referring expressions: A survey. Computational Linguistics, 38(1), 173—218.

MacEachren, A. M. (2004). How maps work: Representation, visualization, and design. New York: The Guildford Press.

Mason, L., Pluchino, P., Tornatora, M. C., & Ariasi, N. (2013). An eye-tracking study of learning from science text with concrete and abstract illustrations. The Journal of Experimental Education, 81(3), 356—384.

Mayer, R. E. (2005). The Cambridge handbook of multimedia learning. Cambridge: Cambridge University press.

Mitchell, M. (2013). Generating reference to visible objects. Ph.D. dissertation, University of Aberdeen.

Naor-Raz, G., Tarr, M. J., & Kersten, D. (2003). Is color an intrinsic property of object representation? Perception, 32(6), 667—680.

Neurath, M. (1974). Isotype. Instructional Science, 3(2), 127—150.

Ostergaard, A. L. & Davidoff, J. B. (1985). Some effects of color on naming and recognition of objects. Journal of Experimental Psychology: Learning, Memory, and Cognition, 11(3), 579—587.

Pettersson, R. (1998). Image functions in information design. In Proceedings of the 30th annual conference of the International Visual Literacy Association (IVLA), Athens, GA.

Pettersson, R. (2013). Image design. Tullinge, Sweden: Rune Pettersson.

Pierroutsakos, S. L. & DeLoache, J. S. (2003). Infants’ manual exploration of pictorial objects varying in realism. Infancy, 4(1), 141—156.

Pinker, S. (1984). Visual cognition: An introduction. Cognition, 18(1), 1—63.

Price, C. J. & Humphreys, G. W. (1989). The effects of surface detail on object categorization and naming. The Quarterly Journal of Experimental Psychology A: Human Experimental Psychology, 41(4A), 797—827.

Rieber, L. P. (2000). Computers, graphics, & learning. Hull, GA: Lloyd P. Rieber.

Scheiter, K., Gerjets, P., Huk, T., Imhof, B., & Kammerer, Y. (2009). The effects of realism in learning with dynamic visualizations. Learning and Instruction, 19(6), 481—494.

Schwartz, D. L. (1995). Reasoning about the referent of a picture versus reasoning about the picture as the referent: An effect of visual realism. Memory & Cognition, 23(6), 709—722.

Smallman, H. S. & Cook, M. B. (2011). Naïve realism: Folk fallacies in the design and use of visual displays. Topics in Cognitive Science, 3(3), 579—608.

Tanaka, J., Weiskopf, D., & Williams, P. (2001). The role of color in high-level vision. Trends in Cognitive Sciences, 5(5), 211—215.

Tanaka, J. & Presnell, L. (1999). Color diagnosticity in object recognition. Perception and Psychophysics, 2(6), 1140—1153.

Tatler, B. W. & Melcher, D. (2007). Pictures in mind: Initial encoding of object properties varies with the realism of the scene stimulus. Perception, 36(12), 1715—1729.

Taylor, H. A. & Tversky, B. (1992). Descriptions and depictions of environments. Memory & Cognition, 20(5), 483—496.

Therriault, D., Yaxley, R., & Zwaan, R. (2009). The role of color diagnosticity in object recognition and representation. Cognitive Processing, 10(4), 335—342.

Tversky, B. (2001). Spatial schemas in depictions. In M. Gattis (Ed.), Spatial Schemas And Abstract Thought (pp. 79—111). Cambridge: MIT Press.

Tversky, B. (2011). Visualizing thought. Topics in Cognitive Science, 3(3), 499—535.

Vernon, D. & Lloyd-Jones, T. J. (2003). The role of colour in implicit and explicit memory performance. The Quarterly Journal of Experimental Psychology, 56(5), 779—802.

Table of contents

Chapter 2

Naming and remembering typically and atypically colored objects

This chapter is based on:

Westerbeek, H., Van Amelsvoort, M., Maes, A., & Swerts, M. (in preparation). Naming and remembering atypically colored objects: Support for the processing time account for a bizarreness effect.

An earlier version of this work has been presented in:

Westerbeek, H., Van Amelsvoort, M., Maes, A., & Swerts, M. (2014). Naming and remembering atypically colored objects: Support for the processing time account for a secondary distinctiveness effect. In Proceedings of the 36th annual meeting of the Cognitive Science Society (CogSci), Quebec City, Canada.

Abstract The bizarreness effect is the effect that stimuli that are distinctive or different from stored knowledge are remembered better than common stimuli. We combine methodology from object recognition with memory tasks to investigate the processing time explanation for this effect, i.e., that distinctive stimuli are remembered better because they are more deeply processed and thus take more processing time during encoding. Participants in our experiment named common and distinctive items (typically and atypically colored objects), and memory was tested in recognition tasks. Our results replicate the bizarreness effect, as recognition scores were higher for atypically colored objects than for typical ones. Crucially, analyses of response times in the naming task showed that participants need significantly more time to process atypically colored objects. Also, longer response latencies in the naming task predicted better recognition, such that an increase in processing time caused by color atypicality was associated with an increase in memorability for atypically colored objects. Our results support the processing time hypothesis for the bizarreness effect. However, in a follow-up experiment we found that the effect diminishes when the recognition task is replaced by free recall. We interpret these findings as indicating that processing time during encoding plays a role in the bizarreness effect for atypically colored objects, but it does not reliably predict it.

Introduction

A recurring finding in experimental psychology is that items that are unusual or distinctive are remembered better than common items (e.g., Hunt & Worthen, 2006). This distinctiveness effect remains a field of investigation in current experimental psychology (e.g., McDaniel & Bugg, 2008; Michelon, Snyder, Buckner, McAvoy, & Zacks, 2003). There have been attempts to explain this effect in terms of differences in processing during encoding: Better memory for distinctive stimuli is associated with more attention and thus more processing time during encoding (e.g., Gounden & Nicolas, 2012; Kline & Groninger, 1991; McDaniel & Einstein, 1986). However, research aimed to test this explanation has been inconclusive. As we will argue below, this could partly be due to the way processing time has been operationalized and analyzed in previous studies. Also, we argue that the choice of stimuli to manipulate common and distinctive items could allow for alternative explanations. In the current chapter, we address these two potential problems, in order to investigate whether processing time is an explanatory variable for the secondary distinctiveness effect.

The secondary distinctiveness effect is the effect of better memory for items that are incongruent with general knowledge and expectations based on experiences with the real world (e.g., Schmidt, 1985, 1991). A specific secondary distinctiveness effect is the bizarreness effect: The effect that stimuli that show or describe something that is very unlikely are found to be more memorable than common stimuli. For example, a sentence like “the dog rode the bicycle down the street” (McDaniel & Einstein, 1986) is found to be remembered better than the non-distinctive equivalent “the dog chased the bicycle down the street”. In other studies, participants were presented with pictures instead of sentences, to exert more control over potential effects of reading and comprehension processes (e.g., Gounden & Nicolas, 2012). Secondary distinctive pictures show objects that are unlikely to be found in reality, such as a an office chair with human legs (Michelon et al., 2003), or a giraffe with two heads (Gounden & Nicolas, 2012). Alike the sentences, such pictures are found to be more memorable than pictures of common objects. Because such sentences and pictures can easily be regarded as strange, this particular secondary distinctiveness effect is called a bizarreness effect (e.g., McDaniel & Bugg, 2008).

The bizarreness effect has been examined using a wide variety of research designs and stimulus materials, in order to explore the conditions under which it occurs (e.g., Gounden & Nicolas, 2012; Graesser, Woll, Kowalski, & Smith, 1980; Nicolas & Marchal, 1998; O’Brien & Wolford, 1982). For example, both sentences and pictures are found to demonstrate the effect. Research designs also differentiate between whether memory is implicitly or explicitly tested, (Nicolas & Marchal, 1998). Designs also differ in how memory is tested (e.g., Gounden & Nicolas, 2012; Graesser et al., 1980). A particularly influential variable is the time span between learning and testing (e.g., O’Brien & Wolford, 1982): The bizarreness effect typically occurs when there is a sufficient delay of about two weeks between encoding and testing (e.g., McDaniel & Einstein, 1986; Michelon et al., 2003), which suggests that both common and distinctive items are remembered initially, but distinctive items are remembered longer than the common ones.

Explanations for the memory advantage for secondary distinctive items have been proposed in terms of differences in how these items and common ones are encoded into memory. Such encoding-based explanations propose that secondary distinctive stimuli are encoded differently than common ones (e.g., Kline & Groninger, 1991; McDaniel, Dornburg, & Guynn, 2005), as the distinctive nature of the stimuli attracts attention to what sets these items apart from what is considered normal or more common. One particularly appealing explanation for the effect that has received scholarly attention is the processing time hypothesis (e.g., Gounden & Nicolas, 2012; Kline & Groninger, 1991; McDaniel & Einstein, 1986). According to this account, distinctive items attract more attention than common ones during learning, and as a consequence more time is spent on the distinctive items. This longer and potentially stronger encoding then leads to superior memory for these stimuli.

While the processing time account is very intuitive, previous studies have not found consistent evidence to support this hypothesis. To test whether processing time during encoding explains the differences in memory for bizarre items, McDaniel and Einstein (1986) presented sentences describing common or bizarre relations between nouns to participants for either 7 or 14 seconds. Through a yes/no recognition task, McDaniel and Einstein measured memory for these items. They report that more nouns from bizarre sentences were recognized correctly than nouns from common sentences, but this effect was not modulated by the different presentation times. The authors report that, in a prior task, common sentences were processed in approximately 7 seconds. So, they reason, when 7 seconds were given to study both common and bizarre sentences, participants would not be able to spend the additional processing time on the bizarre sentences required to obtain an advantage in memory. However, because even at a 7 second presentation rate the nouns from bizarre sentences were recognized better than the nouns from common sentences, McDaniel and Einstein conclude that the mnemonic benefits of bizarreness are not related to increased processing time for such items.

When presentation time of distinctive and common items is manipulated, determining the relevant presentation time windows is crucial. Where McDaniel and Einstein (1986) based their presentation windows for sentences on mean processing times in previous research, Gounden and Nicolas (2012) reason that this method still yields a rather indirect manipulation. Therefore, they used images instead of sentences, taking additional processes involved in reading, comprehending and imagining the meaning of sentences out of the equation. These images were drawings of single objects (e.g., a horse), and incongruous versions of these drawings were created by multiplying salient features of these objects (e.g., a horse with three heads). To observe a potential role of processing time in the secondary distinctiveness effect, the images were presented to participants for either 500, 1000, or 3000 milliseconds. An expected interaction between distinctiveness and presentation time was not found: The incongruous objects were recalled better than the common ones in every presentation time condition. These results seem to suggest that processing time is not related to the secondary distinctiveness effect.

Kline and Groninger (1991) did find an interaction between presentation time and bizarreness. They presented sentences similar to those used by McDaniel and Einstein (1986; 1989) for 3, 5, 7, 11, 15, or 20 seconds, and report a memory effect for some of these time windows, but only when the sentences were relatively complex. However, the direction of the effect is unclear, as common sentences lead to better memory with a presentation time of 11 seconds, the effect reversed at 15 seconds, and no difference was found with a 20 second presentation time window. Therefore, bizarre items were not generally found to be processed longer than common items, and thus a conclusion that longer processing time for distinctive items accounts for the bizarreness effect cannot be based on the data.

In the remainder of this chapter, we discuss two methodological aspects of these studies that may have obscured potential effects of differences in processing time between common and distinctive stimuli: the manipulations of presentation time and the nature of the stimuli used. We argue that, if these methodological aspects are reconsidered, encoding-based explanations for the bizarreness effect may not need to be discarded.

In the studies discussed above, presentation time was manipulated to investigate a potential modulating role of processing time on the bizarreness effect. However, presentation time is not necessarily the same as processing time, and we reason that manipulations of presentation time make it difficult to ascribe secondary distinctiveness effects to differences in processing time. This is not only because presentation time and processing time are not necessarily the same, but also because one cannot know how quickly common and distinctive items are processed. Presentation times in experiments can be too short to obtain the ‘necessary’ encoding time for distinctive items. They can also be too long, such that distinctive items that are potentially harder to process get sufficient processing time anyway, nullifying a potential modulation of the memory effect. Moreover, processing time is likely to vastly differ between different kinds of stimuli.

In contrast, Michelon et al. (2003) investigated an encoding-based explanation for the bizarreness effect without manipulating presentation time, using event related functional magnetic resonance imaging (fMRI) instead. They presented pictorial stimuli to participants, all for 2.8 seconds. As such, Michelon et al. kept presentation times constant throughout their experiment, and measured cortical activity to study whether processing was different for common or incongruous pictures. Michelon et al. report that the incongruous pictures were remembered better than the common ones. Also, their analysis of cortical activation supports encoding-based accounts for the effect as signal increases were greater for distinctive versus common stimuli in several cortical areas. So, Michelon et al. attribute the memory effect to more elaborate processing, and they managed to avoid potential problems with presentation times.

The nature of the stimuli used by Michelon et al. potentially allows for alternative explanations, however. Their common pictures showed familiar objects, such as a teapot. Pictures in the incongruous condition were so-called chimeric objects, comprising of parts of two different objects fused into one, such as a key and a snake. Such pictorial stimuli however do not always yield minimal pairs in distinctiveness research, while the sentences for example used by McDaniel and Einstein (1986) and Kline and Groninger (1991) contain the same amount of words, nouns, and adjectives, irrespective of distinctiveness.

One could argue that using non-minimal pairs increases processing demands during encoding, as in the case of chimeric objects that comprise of (parts of) multiple objects. In such cases two objects are recognized, plus their spatial relationship with respect to each other. This is reflected by Michelon et al.’s finding that these objects elicit activation in both the ventral and the dorsal visual pathway. The dorsal pathway is often said to be associated with processing of spatial relations between objects (e.g., Landau & Jackendoff, 1993). So, the increase in overall cortical activity for chimeric objects may be explained by both their distinctiveness and by the fact that they comprise multiple objects. This problem also likely persists in other aforementioned studies: The objects with multiplied features of Gounden and Nicolas (2012) presented participants with more (visual) cues than the common objects. As a result, it is not immediately clear whether the memory advantage for chimeric or otherwise more complex objects is due to more elaborate processing, or to the fact that these stimuli were more complex and therefore contained more features, so that observers could possibly rely on more cues when retrieving them from memory.

We argue that if the methodological issues concerning presentation time and the nature of the stimuli used that we discussed above are addressed, this warrants a new investigation into the processing time account of the bizarreness effect. If we can present people with items that are secondary distinctive, and which are processed less quickly than common counterparts, we can measure this processing time difference and test whether the increased processing time of distinctive items predicts better memory for these items compared to common items. Furthermore, these distinctive items should not contain additional (visual) features compared to common items. The field of object recognition provides us with stimuli that meet both these criteria.

Studies in object recognition provide evidence that pictures of distinctive objects require more time to be processed than common equivalents. It is well established that pictures of objects that have an atypical color (for example a red banana) are less quickly processed (i.e., recognized and named) than pictures of typically colored objects (e.g., Naor-Raz, Tarr, & Kesten, 2003; Tanaka, Weiskopf, & Williams, 2001; Therriault, Yaxley, & Zwaan, 2009). For example, Therriault et al. (2009) report significantly slower responses for atypically colored objects compared to typically colored ones on naming and verification tasks, as well as on reading times for sentences where nouns are replaced by atypical pictures.

Atypically colored objects are secondary distinctive: They are − like bizarre, incongruous, or chimeric objects − unusual compared to stored knowledge, which contains information about the default color of an object (Bramão, Reis, Petersson, & Faísca, 2011; Naor-Raz et al., 2003). For example, a picture of a red banana contrasts with stored knowledge, which states that bananas are usually yellow. Additionally, typically and atypically colored objects are minimal pairs: They only differ in terms of one property (color) that has a different value. This minimizes potential confounds introduced by non-minimal pairs. Object recognition studies show that processing such atypically colored objects takes more time, but we do not yet know whether this influences memory. In this chapter, we thus combine object recognition with memory tasks.

Table of contents

We want to investigate the processing time hypothesis as an explanation for the bizarreness effect, and we take an interdisciplinary approach by combining methodology from object recognition with procedures from memory research. First, we administer a naming task with pictures of typically and atypically colored objects as encoding task, so we can measure processing time (i.e., naming latency) for common and distinctive items. Consecutively, memory is tested in yes/no recognition tests (in Experiment 1) and in a free recall task (in Experiment 2). This combination of naming and memory tasks allows us to investigate whether a difference in processing time predicts better memory for these items.

Table of contents

Experiment 1

Naming onto recognition

In this experiment, participants named typically and atypically colored everyday objects. They were not instructed about the successive memory tests, so our paradigm entails incidental learning (Nicolas & Marchal, 1998). Directly after naming, a yes/no recognition memory task was administered to test whether incidental learning was successful. The memory task was re-administered two weeks later.

Forty undergraduate students (all speakers of Dutch, thirty-two women and eight men, with a median age of 22 years) participated for course credit. They were not color blind, and all gave written consent for recording their voice and analyzing their data.

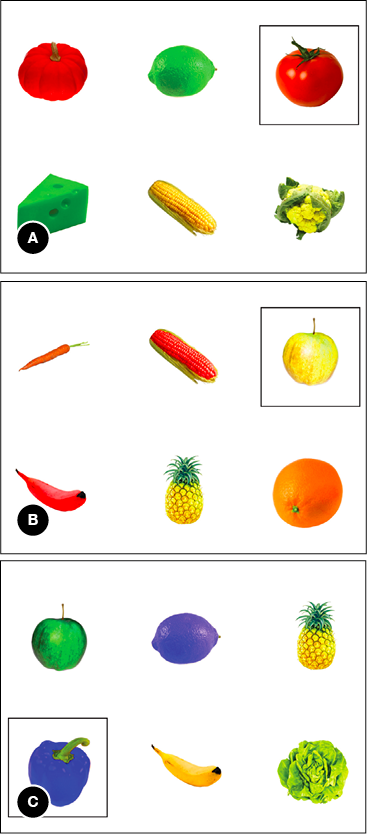

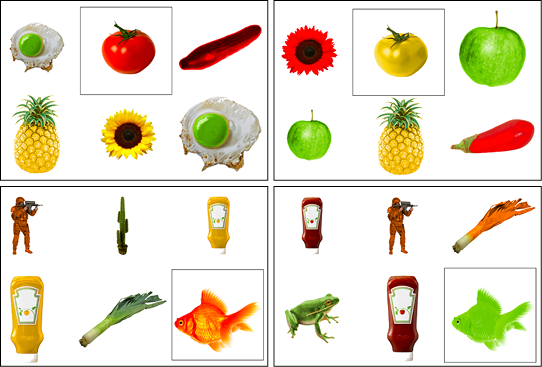

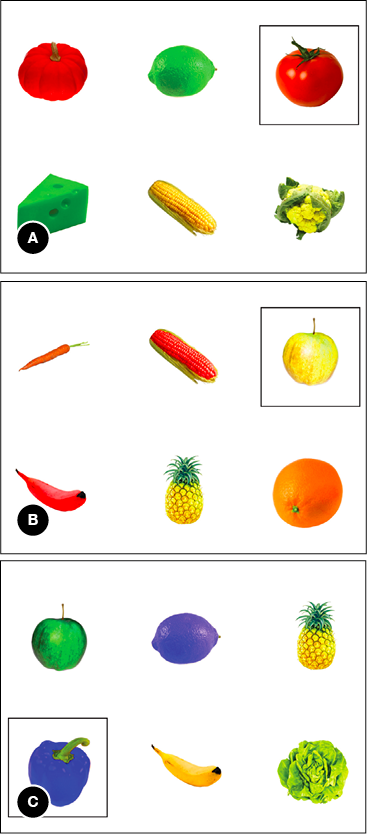

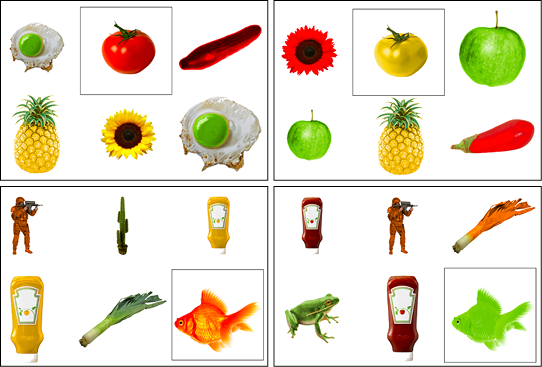

Seventy-six everyday objects were selected on the basis of stimuli used in object recognition studies (e.g., Naor-Raz et al., 2003; Therriault et al., 2009). Because atypically colored versions were to be created, these were all color-diagnostic objects (i.e., objects that have one or a few typical colors associated with them). For each object a high quality photo was selected and edited, such that the object was seen on a plain white background. For the atypically colored versions, further photo editing was done to change the objects’ color. Atypical colors were determined by rotating colors across the various objects, such that the number of objects in each color (red, blue, yellow, orange, green, brown, and pink) was the same in both typicality conditions. We did this to control for any effect of particular colors (hues and luminosities) on naming and recognition, which may confound our manipulation of typicality. Figure 2.1 presents some examples of objects in typical and atypical colors, as we used them in the experiment.

Figure 2.1 Some examples of typically and atypically colored objects, as used in experiments 1 and 2.

The seventy-six objects were equally distributed over two lists. In each list of thirty-eight objects, half of the objects was typically colored, and the other half was atypical. We ensured that an object never appeared in more than one color within each list. Of both lists, a second version was assembled in which color typicality was reversed: Objects that were typically colored in one version were atypical in the other and vice versa. This resulted in two versions of two lists of thirty-eight objects.

The lists were matched for color frequency, whether the objects are easily named (nameability), whether the typically colored pictures matched mental prototypes (prototypicality), how frequent the object’s name is in the language (Dutch), the length of the name in syllables, and the luminosity (i.e., brightness) of the pictures. We also made sure that luminosity was not different for typical and atypical objects within each list. Name frequencies were assessed using an on-line corpus (Keuleers, Brysbaert, & New, 2010). Luminosity was measured using MATLAB (Mathworks, Natick, MA).

Nameability and prototypicality of the typically colored objects were determined in pretests. Nameability was determined by asking ten participants to name both typically and atypically colored objects. Two lists of stimuli were created for this pretest such that they named each object in only one of the two color conditions. Accuracy rates were used to determine whether all objects in our stimulus set would be easily nameable. Whether the typically colored pictures matched mental prototypes was measured by means of an image agreement task (Snodgrass & Vanderwart, 1980): Seven different participants first read the name of an object (e.g., lion), and were instructed to imagine what this object would look like. Consecutively, they rated a picture of this object for how much it resembled what they imagined, on a five-point scale. These ratings were used to establish that the pictures of typically colored objects were found to be common exemplars (M = 4.30, SD = 0.55). None of the participants in the pretests were involved in the experiments reported in this chapter.

The experiment was performed in a dimly lit sound proof cabin, in order to minimize distraction. Participants were randomly assigned to one of the stimulus lists. They were instructed that they would get to see a number of pictures on a computer screen, and that they had to name these objects as quickly as possible. The instructions did not reveal that memory would be tested after the naming task. The objects appeared in a random order, one by one. The presentation time for each object was exactly 3000 ms, preceded by a fixation cross (800 ms) and followed by a blank screen (1000 ms). The first three items were filler objects, after which the thirty-eight stimulus objects were presented. The order of these stimuli was randomized for each participant.

Immediately after the naming task, the participants had to perform a second task. They were informed that the pictures from the first task would be shown once again, but that new objects would be mixed in. Participants had to say as quickly as possible (out loud) whether each object was part of the naming task (“yes”) or not (“no”). The new objects were the objects from the list that the participant did not see in the naming task (so, these were not previously seen in other colors). The order of the objects was randomized for each participant.

The participants were asked to return to the lab about two weeks later, but they were not instructed about the purpose of this second meeting. All participants returned to the lab and performed the yes/no recognition task again. Due to practical constraints, the delay between the tasks ranged from 11 to 18 days across participants (the median delay was 15 days, most participants returned after 14, 15 or 16 days). After this task, color blindness was assessed using the web-based CU Dynamic Colour Vision Test (Barbur, Harlow, & Plant, 1994).

Responses were recorded with a head-mounted microphone. Stimulus randomization, timing, and voice recording were administered using E-Prime (Schneider, Eschman, & Zuccolotto, 2012). Reaction times were measured by analyzing the audio recordings in Praat (Boersma & Weenink, 2012; Kaiser, 2013, p. 144).

For the naming task, we compared response times for typically and atypically colored objects in a within-participants design. For the recognition tasks, we compared hits, false alarms and corrected recognition scores (Pr) within participants. Response times and recognition data were analyzed using repeated measures ANOVAs, both on by-participants means (F1) and on by-item means (F2).

Despite the pretests, five of the seventy-six objects (blackberry, celery, pickle, red cabbage, sprout) yielded disproportionally high numbers of incorrect responses or non-responses, and were excluded (especially the atypically colored versions of these objects turned out to be problematic, as more than seventy percent of participants named the objects incorrectly or refrained from naming). So, all consecutive analyses are performed on the remaining seventy-one objects. Response times for incorrect responses and non-responses were discarded, removing 11.1 percent of the data. An outlier analysis on response times for correctly named objects was conducted, in which we removed response times that were faster than 500 ms or longer than 2500 ms. This outlier procedure resulted in discarding of 0.4 percent of the response times for correctly named objects, well within an acceptable range for response time data (Ratcliff, 1993).

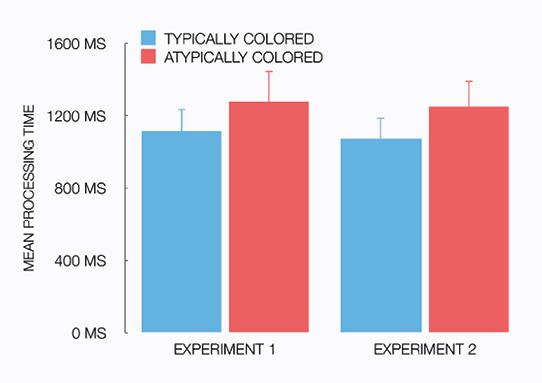

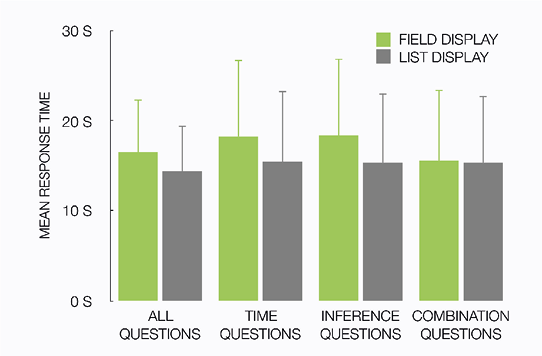

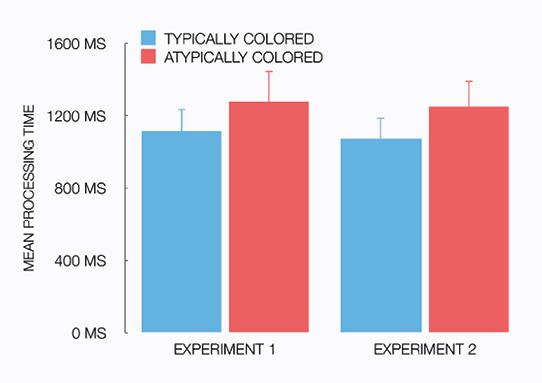

Analysis of the processing time in the naming task, shown in Figure 2.2 (left panel), revealed a main effect of color typicality: F1(1, 39) = 95.85, p < .001, ηp2 = .711; F2(1, 70) = 66.24, p < .001, ηp2 = .486. Typically colored objects were named significantly faster (M = 1,123 ms, SD = 123 ms) than atypically colored ones (M = 1,285 ms, SD = 162 ms). This result replicates previous research in object recognition (e.g., Tanaka et al., 2001; Therriault et al., 2009), and shows that secondary distinctive items are processed less quickly than common ones.

Figure 2.2 Mean processing time (in milliseconds) for atypically and typically colored objects in the naming tasks of experiments 1 and 2.

Notes Error bars are +1 standard deviation.

As is common practice in analyzing responses for recognition tasks, we corrected for response bias by calculating a corrected recognition score or discrimination index Pr (for a comprehensive discussion of measurements of recognition memory, see Snodgrass & Corwin, 1988). This recognition score corrects the percentage of hits (i.e., the participant saying that an object was seen when it actually was) for the percentage of false alarms (i.e., the participant saying that an object was seen while it actually was not), and is calculated as Phit − Pfalse alarm.

Results of the immediate recognition task showed no effects of color typicality on hits, false alarms, and on recognition scores; all p’s > .07. Performance was near perfect as hit rates and corrected recognition scores were both well above 95 percent. This confirmed that naming objects leads to successful encoding.

Results of the delayed recognition task are shown in Table 2.1. Analyses of hit rates revealed a main effect of color typicality, such that there were significantly more hits for atypically colored objects: F1(1, 39) = 35.85, p < .001, ηp2 = .479; F2(1, 70) = 27.89, p < .001 ηp2 = .285. A marginally significant effect in the same direction was found for false alarms: F1(1, 39) = 4.27, p = .046, ηp2 = .099; F2(1, 70) = 3.46, p = .067. Importantly, corrected recognition scores were higher for atypically colored objects than for typically colored ones: F1(1, 39) = 12.16, p = .001, ηp2 = .238; F2(1, 70) = 11.51, p = .001, ηp2 = .141.

Table 2.1 Delayed recognition results (in percentages) of Experiment 1.

Notes * p < .05, ** p < .005, *** p < .001. The indicated significance levels are based on F1-analyses.

|

Typically colored objects |

|

Atypically colored objects |

|

|

M |

(SD) |

|

M |

(SD) |

|

| Hits |

67.5 |

(16.6) |

|

82.8 |

(10.2) |

*** |

| False alarms |

20.9 |

(16.3) |

|

26.4 |

(15.4) |

* |

| Recognition score (Pr) |

46.7 |

(16.8) |

|

56.6 |

(15.3) |

** |

Initial analyses showed that the number of days between naming and delayed recognition did not affect hits, false alarms, and recognition scores; all p’s > .14. Delay was, therefore, not included as a factor in the analyses above. However, there was an interaction effect between delay and color typicality for hits, F(1, 38) = 6.23, p = .017, ηp2 = .141, which suggested that the effect of color typicality increased as a function of the number of days between naming and recognition.

These results show that those items that were processed longer in the naming task (i.e., the atypically colored objects) were also remembered better than items that were processed more quickly in the naming task (typically colored objects). To further explore this relationship between the results of the naming task and those of the recognition task, we carried out by-item linear regression analyses with naming latency as the predictor and corrected recognition scores as the outcome variable. A longer processing time in the naming task was associated with a higher recognition score in the delayed recognition task, β = .36, t(141) = 4.52, p < .001. A longer processing time predicted a higher recognition score, R2 = .13, F(1, 141) = 20.45, p < .001. Per color typicality condition, processing time and recognition score were similarly associated (typically colored objects: β = .24, t(70) = 2.08, p = .041; atypically colored objects: β = .35, t(70) = 3.10, p = .003). In both conditions, longer processing times predicted higher recognition scores (typical: R2 = .06, F(1, 70) = 4.32, p = .041; atypical: R2 = .12, F(1, 70) = 9.59, p = .003). Finally, the difference in processing time between typically and atypically colored objects in the naming task was associated with with the difference in memory score in delayed recognition, β = .24, t(70) = 2.08, p = .042, as a larger effect size in the naming task predicted a larger effect size in delayed recognition, R2 = .06, F(1, 70) = 4.30, p = .042. This shows that for objects for which processing time was virtually unaffected by color typicality, no secondary distinctiveness effect was found either. Conversely, for objects for which the color typicality manipulation yielded the largest effect on processing time, the memory effect was relatively large as well.

To our knowledge, we are the first to report that longer processing of atypically colored items is associated with better memory for these items, but to assess the robustness of the bizarreness effect found in this experiment we attempt to replicate our findings in a follow-up experiment. Because the yes/no recognition paradigm used in Experiment 1 is arguably relatively sensitive to the perceptual nature of our color typicality manipulation, in Experiment 2 we replaced recognition by free recall. In a free recall task, participants do not receive visual input that may serve as an extra cue that can be exploited to retrieve items from memory. So, by altering the conditions under which items are retrieved from memory, we can investigate whether our finding that longer processing of atypically colored items fully explains their advantage in a memory task, or alternatively, whether a different retrieval paradigm (i.e., without processing of visual input) may modulate the effect of processing time on memorability. This allows us to explore the robustness of the association between processing time and memory for distinctive objects.

Table of contents

Experiment 2

Naming onto free recall

Thirty-nine undergraduate students (all speakers of Dutch, thirty-one women and eight men, with a median age of 21 years) participated for course credit. As in Experiment 1, they were not instructed about the fact that their memory would be tested. None of these participants participated in Experiment 1 nor any of the pretests, and none were color blind. All gave written consent for recording their voice and analyzing their data.

The materials and procedure were identical to Experiment 1, except that instead of yes/no recognition tasks, a free recall task was administered. During free recall, the participants were asked to list as many items they had seen as possible (they were free to mention their colors as well). When the participant indicated that he or she could not remember any more items, the experimenter prompted once more, and in most cases this yielded a few more responses. The delay between the recall tasks ranged from 12 to 16 days across participants (the median delay was 14 days, most participants returned after 13, 14 or 15 days). As in Experiment 1, color blindness was assessed after this task.

Statistical analyses were identical to Experiment 1, except that for the free recall task the number of correctly recalled items was used as the dependent measure.

All consecutive analyses are performed on the same seventy-one stimulus objects as in Experiment 1. Response times for incorrect responses and non-responses were discarded, removing 9.4 percent of the data. The outlier procedure, which was identical to Experiment 1, resulted in discarding of 1.0 percent of the response times for correctly named objects. Analysis of the processing time in the naming task, shown in Figure 2.2 (right panel), revealed a main effect of color typicality: F1(1, 38) = 76.67, p < .001, ηp2 = .669; F2(1, 70) = 95.92, p < .001, ηp2 = .578. Typically colored objects were named significantly faster (M = 1,078 ms, SD = 111 ms) than atypically colored ones (M = 1,255 ms, SD = 148 ms). These results replicate our findings in Experiment 1, as well as findings in other object recognition studies.

Results of the immediate free recall task showed no effect of color typicality on the number of items recalled, as about an equal amount of typically colored (M = 6.9 objects, SD = 2.1 objects) and atypically colored objects (M = 6.5, SD = 2.7) were recalled: F1 < 1; F2(1, 70) = 1.33, p =.253. Analyses of the number of items recalled in delayed free recall also showed no effect of color typicality, as the same amount of typically (M = 3.4, SD = 1.7) and atypically colored objects (M = 3.4, SD = 2.3) were recalled: F’s < 1. Note that the number of items recalled in both immediate and delayed free recall is arguably rather low, given that the maximum number of recalled items was 19 in each of the typicality conditions. We also observed that the color of objects was hardly ever mentioned in the free recall tasks. Processing times for items in the naming task did not reliably predict the number of times these items were recalled (p’s > .17).

In contrast to our findings in Experiment 1, where we reported a robust effect of color typicality (secondary distinctiveness) on delayed yes/no recognition, no such effect was found in free recall. So, under different retrieval conditions, processing time does not reliably predict the memorability of secondary distinctive items, compared to common items. Specifically, when the retrieval task does not involve visual processing, objects that look different from stored knowledge (i.e., atypically colored objects) are not remembered better than objects that are more prototypical (typically colored ones).

Table of contents

General discussion

We investigated the processing time hypothesis of the bizarreness effect in two experiments in which participants named typically and atypically colored objects, followed by tests of memory for these objects. Atypically colored objects are secondary distinctive: They contrast with stored knowledge about everyday normal objects, and the bizarreness effect predicts that these objects are remembered better than (non-contrasting) typically colored objects. Also, typically and atypically colored objects are minimal pairs, as atypically colored objects do not present people with more (visual) cues than typically colored ones. To our knowledge, our study is the first to investigate the processing time account for the bizarreness effect by measuring (differential) processing times for common and distinctive items and correlating those to memory effects, instead of through an experimenter-controlled manipulation of presentation time.

In Experiment 1, we combined an object naming task with a yes/no recognition memory task. In the naming task, we found that when the color of an object is atypical (e.g., red banana), the object is recognized less quickly than when its color is typical (e.g., red strawberry), replicating results found in object recognition studies (e.g., Therriault et al., 2009). A recognition task that was administered two weeks later produced a bizarreness effect: Atypically colored objects were remembered better than typically colored ones. We thus found that items that received more processing time in encoding are associated with better recognition during the delayed memory test. These results are taken to support a processing time explanation for the bizarreness effect. To our knowledge, we are the first to report a direct association between longer processing times in encoding and better retrieval in the memory task.

We focused on the processing time hypothesis, which is an encoding-based account for the bizarreness effect: Distinctive items are processed longer than common ones during encoding, and are therefore more memorable (e.g., Gounden & Nicolas, 2012; Kline & Groninger, 1991). So, a difference in processing between common and distinctive items can be observed during encoding, which is what we found in both experiments. Additional explanations, based on retrieval processes, have been proposed in the literature (e.g., Hunt & Worthen, 2006). Such explanations attribute an advantage of distinctive items to their bizarreness, which can yield cues that are helpful in retrieving items from memory (e.g., McDaniel & Einstein, 1986; McDaniel et al., 2005). For example, if distinctive items provide more visual features than common items, these extra cues can be utilized during retrieval (e.g, Waddill & McDaniel, 1998). As addressed in the introduction, chimeric objects do just that: An office chair with human legs provides at least one additional (visual) cue compared to a common office chair, and objects with multiplied features also present participants with more of such cues. We argued that when encoding-based explanations for the bizarreness effect are studied, such additional cues should be kept under control as they may introduce confounds in experimental designs. In the current experiments we therefore ensured not to add attributes to distinctive stimuli (relative to common ones).

Our results do not rule out such additional retrieval based explanations of the bizarreness effect. Although we focused on processing time, our data may also provide evidence for differential processing during retrieval of secondary distinctive items compared to common items. We explored this by performing additional analyses on response times in the yes/no recognition task in Experiment 1. In the recognition task that was administered immediately after naming, response times for hits show a similar pattern as the response times in naming and in verification tasks (Therriault et al., 2009), as typically colored objects were recognized more quickly than atypical ones. However, in the delayed recognition task administered two weeks later, retrieval latencies (response times for hits) were not significantly different for typically and atypically colored objects. Although further research is needed, we take this interaction between delay and bizarreness to suggest that different processes are at play in delayed recognition as compared to immediate recognition. A possible explanation is that secondary distinctive items are retrieved more quickly than common items in delayed recognition (as is reflected in the recognition scores in Table 2.1), and that this compensates for any slower object identification caused by the atypicality of these items.

We take the present results to indicate that differential processing time at encoding is an explanatory variable in the bizarreness effect, but this does not preclude effects of differences in retrieval. In fact, when the recognition task was replaced by free recall (in Experiment 2), our results were modulated such that recall of typically and atypically colored objects was not significantly different from each other. And while we can not rule out that this is due to floor effects in the number of items recalled, it suggests that some processes that occurred during recognition (Experiment 1), and not during free recall (Experiment 2), were crucial for the memory effect. Also, the correlation we find between processing time in naming and recognition score in memory in Experiment 1 is significant but not very strong, which leaves variation to be explained, for example, by retrieval-based interpretations of the superior memory for secondary distinctive items over common items. Future research may be directed at the question under which circumstances encoding-based accounts explain more of the bizarreness effect than retrieval-based accounts, and vice versa.